The ‘Godfather of AI’ Fears Machines May Soon Be Smarter Than Humans

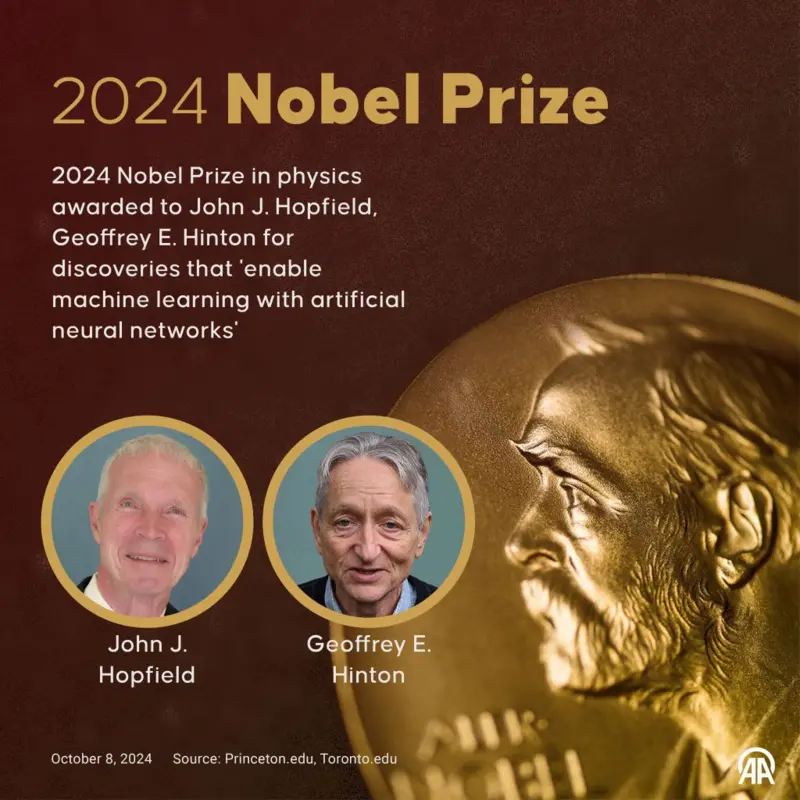

Scientists Geoffrey Hinton and John Hopfield have won the Nobel Prize in Physics for their contributions to the field of machine learning.

British-Canadian academic Geoffrey Hinton, often referred to as the ‘Godfather of Artificial Intelligence,’ expressed surprise upon winning the award.

Hinton resigned from Google in 2023 and has since warned that machines could soon become more intelligent than humans.

The Royal Swedish Academy of Sciences announced the award during a press conference in Stockholm, honoring the two scientists for their revolutionary work.

John Hopfield, 91, is a professor at Princeton University in the US, while Hinton, 76, is a professor at the University of Toronto in Canada.

Machine learning, a foundation of artificial intelligence, lets computers learn from data rather than follow explicitly programmed instructions. Many of today’s technologies, such as internet search and photo editing on our phones, are possible because of machine learning.

Shortly after the announcement, Hinton spoke with the Academy, saying, “I didn’t know this was coming. I’m very surprised.”

The Academy highlighted the significant applications of the work of these two scientists, including climate models, solar cells, and medical imaging technologies.

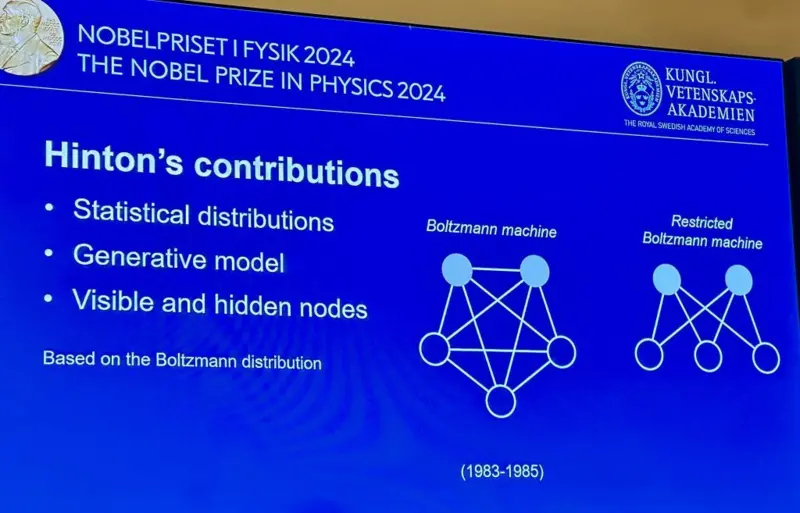

Hinton’s research on neural networks laid the groundwork for modern AI systems like ChatGPT. Neural networks in AI mimic the way the human brain processes information and learns from experience, a process known as ‘deep learning.’ According to Hinton, his work on neural networks was revolutionary.

He explained, “It was like the Industrial Revolution, but instead of enhancing our physical abilities, it was aimed at improving our mental capabilities.”

When asked if he had any regrets about his work, Hinton said that, given the chance to go back, he would still pursue the same path. He nonetheless voiced his fears: “I’m concerned that we could develop systems more intelligent than us, which could end up controlling everything.”

Hinton mentioned that he now uses ChatGPT-4 for many of his tasks but acknowledged that it does not always provide accurate answers.

John Hopfield, for his part, developed a network that can store and reconstruct patterns, drawing on the physics of ‘atomic spin.’ This network mimics the way the human brain stores and recalls information, allowing it to complete incomplete patterns, much like the human mind does.
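To give a rough sense of how such a network can complete a damaged pattern, here is a minimal sketch in Python. It is an illustration only, not Hopfield's original formulation: it assumes patterns made of +1 and -1 values (loosely analogous to spins pointing up or down), a simple Hebbian storage rule, and hypothetical function names `train` and `recall` chosen for this example.

```python
def train(patterns):
    """Build a weight matrix from +1/-1 patterns using a Hebbian rule (assumed here)."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j] / n
    return weights


def recall(weights, state, max_sweeps=10):
    """Update units one at a time until the state stops changing."""
    s = list(state)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            # Each unit aligns with the weighted input from all other units.
            field = sum(weights[i][j] * s[j] for j in range(n))
            new_value = 1 if field >= 0 else -1
            if new_value != s[i]:
                s[i] = new_value
                changed = True
        if not changed:
            break
    return s


# Two stored "memories" (illustrative data, not from the article).
memory_a = [1, 1, 1, 1, -1, -1, -1, -1]
memory_b = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train([memory_a, memory_b])

# Damage the first memory by flipping one value, then let the network repair it.
damaged = [-1, 1, 1, 1, -1, -1, -1, -1]
restored = recall(weights, damaged)
print(restored == memory_a)
```

In this toy setup the damaged input settles back onto the stored pattern it most resembles, which is the "completing incomplete patterns" behaviour described above. Real applications use far larger networks and more elaborate training methods.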

The Nobel Committee noted that the work of both scientists has become integral to our daily lives, including facial recognition and language translation technologies.

However, Ellen Moons, the chair of the Nobel Committee for Physics, said that the rapid development of machine learning has also raised concerns about our future.

The two scientists will share an award of 11 million Swedish kronor (approximately $1.1 million).

Why Did Hinton Resign from Google?

In May 2023, Geoffrey Hinton announced his resignation from Google, telling the BBC that some of the risks posed by AI chatbots were “quite scary.”

He stated, “From what I understand, they’re not smarter than us yet, but I believe they could be soon.”

Hinton acknowledged that AI holds tremendous potential for humanity but said that, in the absence of clear regulations and boundaries, the current pace of development is alarming.

In a statement to The New York Times, Hinton shared his regret, fearing that some “bad actors” could use AI for harmful purposes.

The computer scientist warned the BBC that AI chatbots might soon hold more information than a human brain.

He said, “What we’re seeing now is that GPT-4 surpasses any one individual in general knowledge. In terms of reasoning, it’s not quite there yet, but it can already do so to some extent.”

“Given the rate of progress, we expect things to improve very quickly, so we need to be concerned about this.”

Asked by the BBC about the “bad actors” he had described to The New York Times as people who could use AI for “bad things,” he responded, “This is just the worst-case scenario, a nightmare situation.”

“For example, you could imagine a bad actor like [Russian President Vladimir] Putin giving robots the ability to set their own sub-goals.”

The scientist warned that such systems might eventually create sub-goals such as “I need to gain more power.”